Google Data Commons: Unlocking Real-World Data for AI

Google is turning its vast collection of public datasets into a resource for artificial intelligence. With the release of the Data Commons Model Context Protocol (MCP) Server, researchers, developers, and AI builders can query real-world data in everyday language and build more reliable training pipelines.

The Data Commons project itself started in 2018, gathering data from government surveys, administrative sources, and international bodies such as the United Nations. The new release widens access: the data can now be queried in natural language, so developers can integrate it directly into AI tools, assistants, and applications.

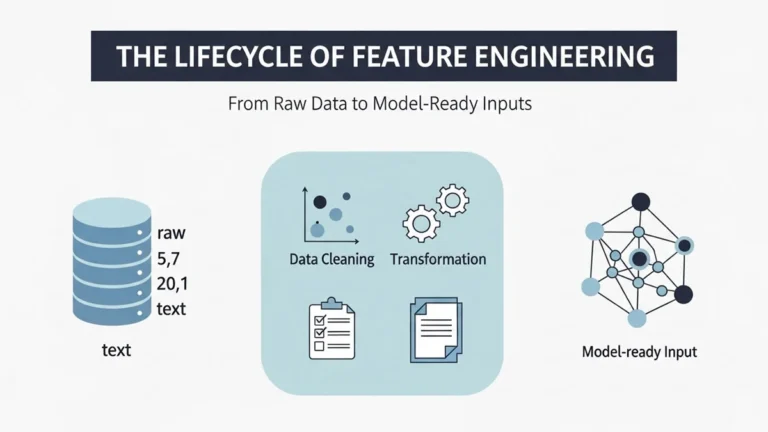

AI models frequently rely on noisy or unverified internet material. When relevant sources are missing, they often fill the gaps with invented details, a behavior widely known as hallucination. This is a significant obstacle for companies trying to fine-tune AI for business-specific or mission-critical scenarios.

Large, high-quality structured datasets are essential, but they are usually hard to locate or integrate. By making its Model Context Protocol Server publicly available, Google seeks to address both the scarcity of reliable information and the integration challenge faced by AI practitioners worldwide.

The Bridge Between Data Commons and AI Models

The Data Commons platform provides access to valuable statistics ranging from census numbers and climate measurements to economic growth indicators. With the MCP Server, this information is made usable through natural language inputs.

That means AI agents can be trained or guided with factual information instead of depending solely on web-scraped content. This release brings a stronger foundation for grounding large language models in verifiable, structured knowledge drawn from recognized institutions.

Prem Ramaswami, who leads Google Data Commons, explained that the Model Context Protocol lets teams use the intelligence of large language models to pick the right data at the right time.

Users no longer need to understand how the data is modeled or how the underlying API works. Instead, the model selects the relevant context and the server handles retrieval.

Understanding the Model Context Protocol

The Model Context Protocol, first introduced by Anthropic in November 2024, was designed as an open industry standard for AI data connectivity. It allows AI systems to access content from multiple sources, such as productivity applications, development environments, and business repositories.

The protocol delivers a common framework for interpreting contextual prompts across different services. Since its introduction, major technology organizations, including OpenAI, Microsoft, and Google, have adopted MCP to unify their approaches toward integrating models with reliable external data sources.
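To make the "common framework" concrete, here is a minimal sketch of the messages an MCP client sends. MCP exchanges JSON-RPC 2.0 messages, and the `tools/list` and `tools/call` methods come from the MCP specification. The tool name `lookup_statistic` and its `query` argument are hypothetical placeholders, not names taken from the Data Commons server.

```python
import json
from itertools import count

# Simple counter so each request gets a unique JSON-RPC id.
_request_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request envelope, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request():
    """Ask a server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool_request(name, arguments):
    """Ask a server to run one tool with the given arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(json.dumps(list_tools_request(), indent=2))
    # "lookup_statistic" and "query" are hypothetical, for illustration only.
    print(json.dumps(
        call_tool_request("lookup_statistic", {"query": "population of Kenya"}),
        indent=2,
    ))
```

Because every server speaks the same envelope, a client written once can talk to any MCP server; only the tool names and arguments differ.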

While many companies explored ways to connect MCP with proprietary models, Google’s team went further by focusing on making the protocol serve public knowledge through Data Commons.

Their investigation earlier this year resulted in a dedicated MCP Server that opens up the rich dataset library in a way developers and AI researchers can use immediately. The move is significant because it enables a wide community to tap into verified information at scale rather than depending on custom or closed solutions.

Partnership with the ONE Campaign

Google also collaborated with the ONE Campaign, a nonprofit group working to advance economic opportunity and health outcomes across Africa. Together, they created the ONE Data Agent, an AI tool that uses the MCP Server to surface millions of development statistics, financial records, and healthcare indicators in plain language. This cooperation highlights the practical value of combining open standards with reliable datasets.

The ONE Campaign had previously built a prototype MCP implementation on its own server. That initiative sparked discussions with Google Data Commons, which ultimately motivated Ramaswami’s team to design and release a full-fledged public MCP Server in September 2025. This turning point shows how nonprofit use cases can influence the direction of technological infrastructure, pushing major players like Google to broaden accessibility for all sectors.

Open Tools and Developer Access

Importantly, the Data Commons MCP Server is not restricted to Google’s own ecosystem. Because MCP is an open standard, the server can operate with any large language model, and developers can experiment with multiple options. A sample agent built with the Agent Development Kit (ADK) is available as a Colab notebook, allowing fast testing.

In addition, the server can be reached via the Gemini CLI or through any MCP-compatible client using a Python package available on PyPI. To help developers adopt it smoothly, example code and usage guidelines have also been posted on GitHub.
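As a rough illustration of what registering the server with a client involves, the sketch below generates a configuration entry in the `mcpServers` layout that several MCP clients read from a JSON settings file. Treat the specifics as assumptions to verify against the project's GitHub instructions: the package name `datacommons-mcp`, the `uvx` launcher, the `serve stdio` arguments, and the `DC_API_KEY` environment variable are not confirmed by this article.

```python
import json


def build_mcp_client_config(server_name, command, args, env=None):
    """Return a settings dict in the common `mcpServers` layout."""
    entry = {"command": command, "args": list(args)}
    if env:
        entry["env"] = dict(env)
    return {"mcpServers": {server_name: entry}}


# Every value below is an assumption; check the official docs before use.
config = build_mcp_client_config(
    server_name="datacommons-mcp",
    command="uvx",                                 # assumed launcher
    args=["datacommons-mcp", "serve", "stdio"],    # assumed package and subcommand
    env={"DC_API_KEY": "<your-api-key>"},          # assumed variable name
)

if __name__ == "__main__":
    # Paste the printed JSON into the client's settings file.
    print(json.dumps(config, indent=2))
```

Generating the entry in code rather than editing JSON by hand makes it easy to emit the same server definition for more than one client.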

This open approach signals that Google is not simply releasing another proprietary framework. Instead, it supports a growing ecosystem where many AI platforms and developers can build advanced solutions grounded in shared real-world data. That combination of standardization and open access makes it easier for businesses, researchers, and independent developers to experiment with building responsible AI.

Why This Matters for the AI Ecosystem

The AI industry has long been criticized for relying too heavily on noisy, biased, and unverified online content. By introducing a structured bridge between public data and AI training pipelines, Google is addressing a critical weakness. Reliable data is fundamental for reducing hallucinations, ensuring transparency, and improving model accuracy. Enterprises working in healthcare, finance, climate analysis, or government policy will particularly benefit because they require trustworthy datasets for sensitive tasks.

Furthermore, the integration of MCP with Data Commons shows the potential of collaboration across organizations. Instead of each company reinventing isolated data pipelines, an open protocol invites interoperability. This encourages both competition and cooperation, resulting in faster progress and wider adoption of responsible AI standards. It also ensures that AI development remains grounded in factual knowledge rather than speculation.

The Road Ahead

Looking forward, the MCP Server for Data Commons could serve as a blueprint for how other large institutions make their data accessible. Universities, research centers, and government agencies might adopt similar strategies to share verified datasets with the AI community. This shift could accelerate innovation in fields ranging from climate change modeling and epidemiology to education and urban planning.

Google’s decision to open Data Commons through MCP demonstrates how tech giants can use their scale for public good. By reducing barriers to high-quality information, the company is enabling more reliable and transparent AI applications. It also underscores a growing recognition across the industry that the future of artificial intelligence will depend less on sheer model size and more on grounding systems with credible data.
