7 Tips for Building Machine Learning Models That Are Actually Useful

Developing machine learning models that genuinely solve practical problems is not simply about hitting impressive accuracy numbers on evaluation datasets. It’s about creating systems that operate reliably in production environments and consistently support real-world use cases.

This article outlines seven actionable strategies to ensure your models generate consistent business value rather than just strong benchmark scores. Let’s dive in!

1. Begin With the Problem, Not the Technique

One of the biggest mistakes in machine learning projects is prioritizing algorithms before deeply understanding the problem. Rather than jumping into coding neural networks, boosting frameworks, or hyperparameter searches, spend meaningful time with the stakeholders who will actually apply your model.

Practical approach:

- Observe ongoing workflows for at least several days

- Calculate the monetary trade-offs of false negatives versus false positives

- Map out every stage your model will integrate into

- Define what “acceptable” performance realistically means for the business

For example, a fraud detection system with 95% detection but a 20% false-alert rate might look impressive on paper, yet it becomes operationally inefficient once every alert needs a manual review. Often, the most impactful model is the simplest one that delivers measurable results.
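
To see why those numbers can overwhelm a review team, it helps to run the arithmetic. The sketch below assumes that "20% false alerts" means 20% of legitimate transactions get flagged, and it assumes a hypothetical batch of 100,000 transactions with a 1% fraud rate. Neither figure comes from a real system, and `alert_workload` is just an illustrative helper name.

```python
def alert_workload(n_transactions, fraud_rate, recall, false_positive_rate):
    """Return (true alerts, false alerts, precision) for a batch of transactions."""
    n_fraud = n_transactions * fraud_rate
    n_legit = n_transactions - n_fraud
    true_alerts = n_fraud * recall                 # fraud cases the model catches
    false_alerts = n_legit * false_positive_rate   # legitimate cases flagged anyway
    precision = true_alerts / (true_alerts + false_alerts)
    return true_alerts, false_alerts, precision


# Hypothetical volume and fraud rate; only the 95% and 20% come from the text.
true_alerts, false_alerts, precision = alert_workload(
    n_transactions=100_000, fraud_rate=0.01, recall=0.95, false_positive_rate=0.20
)
print(f"true alerts: {true_alerts:.0f}, "
      f"false alerts: {false_alerts:.0f}, "
      f"precision: {precision:.1%}")
```

Under these assumptions, about 19,800 of roughly 20,750 alerts are false, so fewer than 5% of alerts point to real fraud. A different fraud rate changes the picture, which is why the cost conversation with stakeholders should come before model selection.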

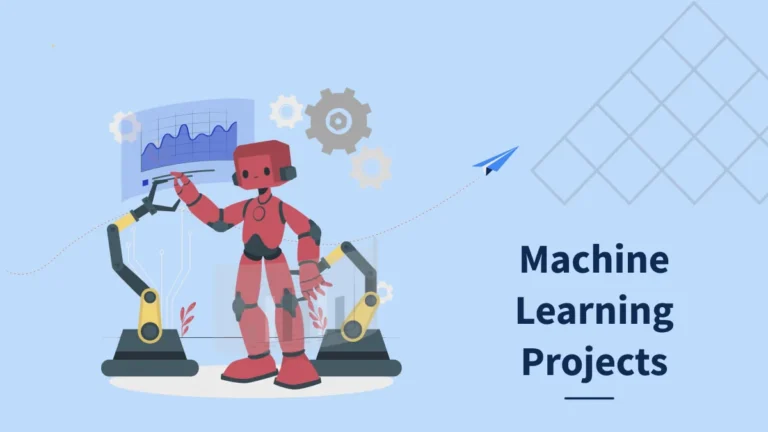

2. Treat Data Quality as Your Core Advantage

A model’s success directly mirrors the strength of its dataset. Yet many practitioners spend roughly 80% of their energy on algorithms while neglecting data quality. Invert that ratio. High-quality, unbiased, and consistent datasets usually outperform sophisticated architectures trained on poor inputs.

Best practices:

- Implement automated data validation across every pipeline execution

- Monitor data drift in production with continuous metrics

- Keep records of data transformations and original sources

- Trigger alerts whenever critical statistical measures shift

Remember: a basic linear regression with reliable information can outperform deep learning systems trained on inconsistent or outdated inputs. Treat your data infrastructure as mission-critical because it truly is.
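
As a rough illustration of automated validation, the sketch below checks a batch of records for missing values, wrong types, and out-of-range values before it reaches training or inference. The schema format, the column names, and the 5% null-rate limit are all assumptions made for the example; a production pipeline would more likely rely on a dedicated validation library.

```python
def validate_batch(rows, schema, max_null_rate=0.05):
    """Return a list of human-readable problems found in a batch of records.

    `schema` maps column name -> (expected type, min value, max value).
    """
    if not rows:
        return ["batch is empty"]
    problems = []
    for column, (expected_type, low, high) in schema.items():
        values = [row.get(column) for row in rows]
        null_rate = sum(v is None for v in values) / len(rows)
        if null_rate > max_null_rate:
            problems.append(
                f"{column}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}"
            )
        for v in values:
            if v is None:
                continue
            if not isinstance(v, expected_type):
                problems.append(f"{column}: unexpected type {type(v).__name__}")
                break  # one report per column is enough
            if not low <= v <= high:
                problems.append(f"{column}: value {v} outside [{low}, {high}]")
                break
    return problems


# Hypothetical schema and batches, for illustration only.
schema = {"amount": (float, 0.0, 10_000.0), "age": (int, 18, 120)}
good = [{"amount": 12.5, "age": 34}, {"amount": 80.0, "age": 51}]
bad = [{"amount": -3.0, "age": 34}, {"amount": 80.0, "age": None}]

print(validate_batch(good, schema))  # no problems found
for problem in validate_batch(bad, schema):
    print(problem)
```

Running a check like this on every pipeline execution, and failing the run when the list is non-empty, is one simple way to stop bad data before it silently degrades a model.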

3. Build for Interpretability From the Start

While “black box” approaches may seem appealing during experimentation, real-world deployments demand interpretability. When a costly misprediction occurs, you must be able to explain why it happened and how to avoid it next time.

Ways to improve interpretability:

- Apply SHAP or LIME for transparent prediction explanations

- Use model-agnostic tools that operate across algorithms

- Compare against interpretable baselines like decision trees

- Document which features drive outputs in plain language

This is not only for compliance or debugging. Clear explanations strengthen trust with business partners and reveal new domain insights. A transparent model can be refined systematically and responsibly.
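
SHAP and LIME are full libraries with their own APIs, so the sketch below uses a simpler model-agnostic technique instead: permutation importance, which measures how much accuracy drops when one feature column is shuffled. It is not a substitute for SHAP or LIME, and `toy_model` is a hypothetical stand-in for a trained model.

```python
import random


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    `predict` is any function mapping one feature row (a list) to a label,
    so the same code works regardless of the model behind it.
    """
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(row) == label for row, label in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [row[:j] + [value] + row[j + 1:]
                        for row, value in zip(X, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy stand-in for a trained model: it only looks at feature 0.
def toy_model(row):
    return int(row[0] > 0.5)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(row) for row in X]

importances = permutation_importance(toy_model, X, y)
print(importances)
```

Feature 1 gets an importance of zero because the toy model never reads it, while shuffling feature 0 costs a large share of accuracy. A plain-language summary of results like these is often what business partners actually want to see.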

4. Validate Using Real-World Conditions, Not Just Datasets

Conventional train/validation/test splits overlook a critical factor: whether the model functions under shifting conditions. Production deployment brings data distribution drift, edge cases, and adversarial challenges unseen in training.

Better validation strategies:

- Evaluate performance separately across regions, time periods, and demographic groups

- Simulate extreme edge cases to reveal weaknesses

- Apply adversarial validation to uncover dataset mismatches

- Design stress tests that push models beyond standard ranges

If your model performs well on last month’s set but fails on today’s data streams, it is not truly reliable. Robustness must be built into validation from day one.
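
One lightweight way to act on this is to report metrics per slice rather than as a single number. The sketch below groups a hypothetical evaluation log by month; the field names `month`, `label`, and `prediction` are assumptions for the example, and the same function works for regions or demographic groups.

```python
from collections import defaultdict


def accuracy_by_slice(records, slice_key):
    """Group labeled predictions by `slice_key` and return accuracy per group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for record in records:
        group = record[slice_key]
        totals[group] += 1
        hits[group] += int(record["prediction"] == record["label"])
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical evaluation log; values are made up for illustration.
records = [
    {"month": "2024-01", "label": 1, "prediction": 1},
    {"month": "2024-01", "label": 0, "prediction": 0},
    {"month": "2024-01", "label": 1, "prediction": 1},
    {"month": "2024-01", "label": 0, "prediction": 0},
    {"month": "2024-02", "label": 1, "prediction": 0},
    {"month": "2024-02", "label": 0, "prediction": 0},
    {"month": "2024-02", "label": 1, "prediction": 0},
    {"month": "2024-02", "label": 1, "prediction": 1},
]

overall = sum(r["prediction"] == r["label"] for r in records) / len(records)
per_month = accuracy_by_slice(records, "month")
print(f"overall accuracy: {overall:.2f}")
print(per_month)
```

In this made-up log the overall accuracy of 0.75 hides the fact that the most recent month sits at 0.5, which is the kind of gap a single aggregate score tends to conceal.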

5. Establish Monitoring Before Deployment

Many teams mistakenly delay monitoring until after release, but models degrade silently. By the time issues show up in KPIs, the damage may already be substantial.

Key monitoring practices:

- Track input distributions continuously to catch drift early

- Assess prediction confidence and highlight anomalies

- Measure and log accuracy metrics over time

- Correlate model outcomes with actual business indicators

- Send automatic alerts when irregular behavior emerges

Build monitoring tools during development, not afterward. Your monitoring should notify you of problems long before users feel the impact.
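
As one possible starting point for input monitoring, the sketch below computes the Population Stability Index (PSI) between a reference sample and a current sample of a single numeric feature. The equal-width binning, the `1e-6` floor for empty bins, and the alert thresholds in the usage note are common conventions assumed here, not requirements.

```python
import math
import random


def population_stability_index(reference, current, n_bins=10):
    """PSI between a reference sample and a current sample of one numeric feature."""
    low, high = min(reference), max(reference)
    width = (high - low) / n_bins
    if width == 0:
        width = 1.0  # constant reference feature: everything falls in one bin

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            index = min(max(int((v - low) / width), 0), n_bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


# Synthetic data: one stable sample and one whose mean has shifted.
rng = random.Random(0)
training = [rng.gauss(0, 1) for _ in range(5000)]
stable = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(1, 1) for _ in range(5000)]

psi_stable = population_stability_index(training, stable)
psi_shifted = population_stability_index(training, shifted)
print(f"stable: {psi_stable:.3f}, shifted: {psi_shifted:.3f}")
```

A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but those cutoffs are conventions that should be tuned against your own data before they drive alerts.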

6. Plan for Continuous Updates and Retraining

Models naturally lose effectiveness. User actions evolve, markets shift, and data patterns drift. A model that excels today could degrade tomorrow unless retraining processes are systematized.

Sustainable updating habits:

- Automate feature engineering and pipeline refreshes

- Trigger retraining whenever performance dips below a defined threshold

- Test updated versions with A/B frameworks

- Apply version control across models, datasets, and codebases

- Prepare for incremental tuning as well as full rebuilds

The objective isn’t perfection; it’s adaptability. Robust ML systems should evolve with changing circumstances while maintaining stability.
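
A threshold-based retraining trigger can be sketched in a few lines. The version below tracks accuracy over a rolling window of labeled outcomes and signals when it falls below a threshold. The class name `RetrainingTrigger`, the window size, and the threshold values are hypothetical; in practice the signal would start a retraining job rather than return a boolean.

```python
from collections import deque


class RetrainingTrigger:
    """Signal retraining when rolling accuracy falls below a threshold."""

    def __init__(self, threshold=0.9, window=100, min_samples=20):
        self.threshold = threshold
        self.min_samples = min_samples
        self.outcomes = deque(maxlen=window)  # keeps only the latest outcomes

    def record(self, prediction, actual):
        """Store one labeled outcome and return True if retraining is due."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.min_samples:
            return False  # not enough evidence yet
        rolling_accuracy = sum(self.outcomes) / len(self.outcomes)
        return rolling_accuracy < self.threshold


# Illustrative run: ten correct predictions, then three wrong ones.
trigger = RetrainingTrigger(threshold=0.8, window=10, min_samples=5)
healthy = [trigger.record(1, 1) for _ in range(10)]
degraded = [trigger.record(1, 0) for _ in range(3)]
print(healthy)
print(degraded)
```

In this run the trigger stays quiet while predictions are correct and fires on the third consecutive error, once rolling accuracy drops to 0.7. The `min_samples` guard is there so a single early mistake does not kick off a retraining cycle.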

7. Optimize for Measurable Business Results, Not Just Scores

Metrics like accuracy or recall are useful but not the final goal. The most effective machine learning models maximize tangible outcomes: revenue growth, cost reductions, customer loyalty, or faster insights.

How to align with business:

- Define success as measurable business benefits

- Apply cost-sensitive methods when error types carry unequal costs

- Track ROI of models regularly

- Build feedback loops connecting predictions to organizational KPIs

A model that improves operations by 10% at 85% accuracy is more valuable than a 99% accurate system with no business impact. Always prioritize measurable value creation over technical benchmarks.
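
One simple cost-sensitive method is to choose the decision threshold that minimizes total error cost instead of maximizing accuracy. The sketch below does this by brute force over candidate thresholds. The scores, labels, and cost values are invented for illustration, and in practice the search should run on a held-out validation set.

```python
def total_error_cost(scores, labels, threshold, cost_fp, cost_fn):
    """Total cost of the errors made when flagging scores >= threshold."""
    cost = 0.0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label == 0:
            cost += cost_fp   # false positive
        elif not flagged and label == 1:
            cost += cost_fn   # false negative
    return cost


def best_threshold(scores, labels, cost_fp, cost_fn):
    """Pick the candidate threshold with the lowest total error cost."""
    # Every observed score is a candidate; infinity means "flag nothing".
    candidates = sorted(set(scores)) + [float("inf")]
    return min(
        candidates,
        key=lambda t: total_error_cost(scores, labels, t, cost_fp, cost_fn),
    )


# Made-up model scores and true labels.
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 1, 0, 0, 1, 1, 1]

equal_costs = best_threshold(scores, labels, cost_fp=1, cost_fn=1)
costly_misses = best_threshold(scores, labels, cost_fp=1, cost_fn=10)
print(equal_costs, costly_misses)
```

With equal costs the best threshold on this toy data is 0.7, but when a missed positive costs ten times as much as a false alarm it drops to 0.3. The model is unchanged; only the business cost assumption moved the operating point.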

7 Tips for Building Machine Learning Models: Wrapping Up

Developing meaningful machine learning models demands thinking beyond code to the complete lifecycle. Start with problem discovery, strengthen data quality, design for interpretability, validate against reality, implement proactive monitoring, plan for retraining, and measure success in business terms.

The most successful practitioners aren’t always those chasing cutting-edge algorithms but those delivering consistent business value with models that perform reliably in production.

Ultimately, a clear, explainable, and adaptive model that fits business needs will usually be more impactful than a complicated one that collapses outside lab conditions.